Predicting Next-Day Price Movement with WallstreetBets

After seeing GameStop's stock price skyrocket in May 2024, I wondered how accurately user sentiment on WallstreetBets could predict the next-day price movement of a particular stock.

To run a quick analysis, I would need to:

- Create a data set

- Scrape the Subreddit over a period of time

- Summarize the sentiment as "Bullish" or "Bearish"

- Create a label that marks the sentiment as correct when it is "Bullish" and the next-day price rises, or "Bearish" and the next-day price falls

- Run a classification model
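The labeling rule in the fourth step can be sketched as a small function. This is a minimal illustration, not the code used later in this post: the name `sentiment_was_correct` is hypothetical, and, like the vectorized version in the data-collection code, it counts an unchanged price as incorrect for both sentiments.

```python
def sentiment_was_correct(sentiment: str, price: float, next_day_price: float) -> int:
    """Return 1 if the sentiment matched the next-day move, else 0.

    Hypothetical helper for illustration: a flat price (no change)
    counts as incorrect for both 'Bullish' and 'Bearish'.
    """
    if sentiment == 'Bullish' and next_day_price > price:
        return 1
    if sentiment == 'Bearish' and next_day_price < price:
        return 1
    return 0


# Toy examples with made-up prices
print(sentiment_was_correct('Bullish', 20.0, 22.5))  # price rose -> 1
print(sentiment_was_correct('Bearish', 20.0, 22.5))  # price rose -> 0
print(sentiment_was_correct('Bearish', 20.0, 19.0))  # price fell -> 1
```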

To create the data set, I used a nifty API from Tradestie that returns the top 50 stocks discussed on the Subreddit, along with data such as the number of comments and the summarized sentiment. I then pulled in stock price data from Yahoo! Finance (via the yfinance library) to flesh out the data set. The code is below:

```python
import requests
import pandas as pd
import yfinance as yf

# List of dates for the API requests
# (2024-05-27 was Memorial Day, so US markets were closed and its rows get dropped below)
dates = ['2024-05-20', '2024-05-21', '2024-05-22', '2024-05-23', '2024-05-27',
         '2024-05-28', '2024-05-29', '2024-05-30', '2024-06-03', '2024-06-04',
         '2024-06-05', '2024-06-06', '2024-06-10', '2024-06-11', '2024-06-12',
         '2024-06-13']

# Initialize an empty DataFrame to store the results
all_data = pd.DataFrame()


# Function to fetch the closing price on a given date
def get_stock_price(ticker, date):
    stock = yf.Ticker(ticker)
    hist = stock.history(start=date, end=pd.to_datetime(date) + pd.Timedelta(days=1))
    if not hist.empty:
        # Get the closing price
        return hist['Close'].values[0]
    return None


# Function to fetch the next calendar day's closing price
# (returns None if that day is a weekend or market holiday)
def get_next_day_stock_price(ticker, date):
    next_day = pd.to_datetime(date) + pd.Timedelta(days=1)
    stock = yf.Ticker(ticker)
    hist = stock.history(start=next_day, end=next_day + pd.Timedelta(days=1))
    if not hist.empty:
        # Get the closing price of the next day
        return hist['Close'].values[0]
    return None


# Function to fetch the sector of the stock ticker
def get_stock_sector(ticker):
    stock = yf.Ticker(ticker)
    return stock.info.get('sector', 'Unknown')


# Iterate over the list of dates
for request_date in dates:
    # URL of the API
    url = f'https://tradestie.com/api/v1/apps/reddit?date={request_date}'

    # Make the GET request to the API
    response = requests.get(url, timeout=30)

    # Check if the request was successful
    if response.status_code == 200:
        # Parse the JSON response and convert it to a DataFrame
        df = pd.DataFrame(response.json())

        # Select columns (.copy() avoids pandas' SettingWithCopyWarning below)
        df = df[['ticker', 'no_of_comments', 'sentiment', 'sentiment_score']].copy()

        # Add a new column 'date' with the date of the API request
        df['date'] = request_date

        # Add new columns 'price' and 'next_day_price' with the stock prices
        df['price'] = df.apply(lambda row: get_stock_price(row['ticker'], row['date']), axis=1)
        df['next_day_price'] = df.apply(lambda row: get_next_day_stock_price(row['ticker'], row['date']), axis=1)

        # Remove rows where either price is missing; otherwise a missing
        # next-day price would silently be labelled as "incorrect"
        df = df.dropna(subset=['price', 'next_day_price']).copy()

        # Add a new column 'sector' with the sector of the stock
        df['sector'] = df['ticker'].apply(get_stock_sector)

        # Vectorized calculation for the 'correct' label, converted to 0/1
        df['correct'] = (
            ((df['sentiment'] == 'Bullish') & (df['next_day_price'] > df['price']))
            | ((df['sentiment'] == 'Bearish') & (df['next_day_price'] < df['price']))
        ).astype(int)

        # Append the DataFrame to the all_data DataFrame
        all_data = pd.concat([all_data, df], ignore_index=True)
    else:
        print(f"Failed to fetch data for {request_date}: {response.status_code}")

# Display the consolidated DataFrame
print(all_data)
```

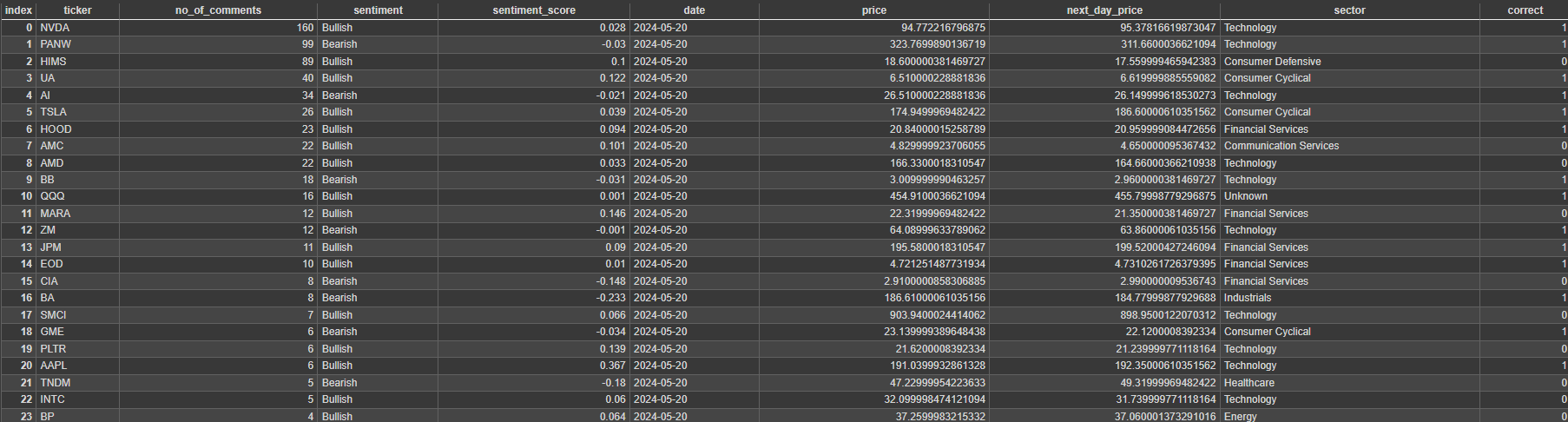

Creating and cleaning a data set of stocks discussed on WallstreetBets
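`get_next_day_stock_price` looks up the next *calendar* day, which returns nothing when that day is a weekend or a market holiday. A minimal sketch of an alternative, assuming you already have one ticker's daily closes as a pandas Series indexed by trading day (the prices below are made up), is to shift the series by one row so each day is paired with the next *trading* day. This is an illustration, not the code that produced the results in this post.

```python
import pandas as pd

# Made-up closing prices for one ticker, indexed by trading day.
# There is no row for the weekend or for Memorial Day (2024-05-27).
closes = pd.Series(
    [20.0, 21.0, 19.5, 23.0],
    index=pd.to_datetime(['2024-05-23', '2024-05-24', '2024-05-28', '2024-05-29']),
    name='Close',
)

# shift(-1) moves each row's NEXT trading-day close onto that row,
# so Friday 2024-05-24 is paired with Tuesday 2024-05-28.
# The last row has no next day yet, so it gets NaN.
prices = pd.DataFrame({'price': closes, 'next_day_price': closes.shift(-1)})
print(prices)
```

In practice you would fetch each ticker's history once over the whole date range and then look up the request dates, which also means one yfinance call per ticker instead of two per row. yfinance typically returns a timezone-aware index, so you may need `closes.index = closes.index.tz_localize(None)` before matching it against plain date strings.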

With this data set in hand, I used TensorFlow's Keras API to build a neural network classifier:

import tensorflow as tf

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import classification_report, accuracy_score

# Preprocess the data for the classification model

# Convert categorical 'sentiment' column to numerical

all_data['sentiment'] = all_data['sentiment'].map({'Bullish': 1, 'Bearish': 0})

# One-hot encode the 'ticker' and 'sector' columns

X = pd.get_dummies(all_data[['ticker', 'no_of_comments', 'sentiment', 'sentiment_score', 'price', 'sector']], columns=['ticker', 'sector'])

# Define the target variable

y = all_data['correct']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Standardize the features

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

# Build the TensorFlow model with 3 layers

model = tf.keras.Sequential([

tf.keras.layers.Dense(64, activation='relu', input_shape=(X_train.shape[1],)),

tf.keras.layers.Dense(32, activation='relu'),

tf.keras.layers.Dense(1, activation='sigmoid')

])

# Compile the model

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Train the model

history = model.fit(X_train, y_train, epochs=100, validation_data=(X_test, y_test), batch_size=32)

# Evaluate the model

loss, accuracy = model.evaluate(X_test, y_test)

print(f"Test Accuracy: {accuracy}")

# Make predictions

y_pred = (model.predict(X_test) > 0.5).astype("int32")

# Evaluate the model using classification report

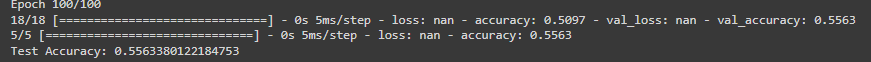

print(classification_report(y_test, y_pred))This model, unfortunately, performed at a test accuracy of 0.56. Given that we are trying to predict the next-day price movement based on the sentiment on a Subreddit, I would say that this is a good first iteration.
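Whether 0.56 is meaningfully better than chance depends on how balanced the `correct` label is: if, say, 55% of the test rows are 0, always predicting 0 already scores 0.55. A quick check is a majority-class baseline, sketched here with scikit-learn's `DummyClassifier`. The arrays below are made-up stand-ins; in practice you would pass the real `X_train`, `y_train`, `X_test`, and `y_test` from the split.

```python
import numpy as np
from sklearn.dummy import DummyClassifier

# Made-up stand-ins for the real train/test split.
# DummyClassifier ignores the features, so zeros are fine here.
X_train = np.zeros((10, 3))
y_train = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1])  # 60% zeros
X_test = np.zeros((5, 3))
y_test = np.array([0, 0, 0, 1, 1])

# Always predict the most common label seen in training
baseline = DummyClassifier(strategy='most_frequent')
baseline.fit(X_train, y_train)
baseline_accuracy = baseline.score(X_test, y_test)
print(f"Majority-class baseline accuracy: {baseline_accuracy:.2f}")
```

A model whose test accuracy does not clearly beat this number has not learned much from the features.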

One can also use logistic regression for the classification:

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import classification_report, accuracy_score

# Preprocess the data for the classification model

# Convert categorical 'sentiment' column to numerical

all_data['sentiment'] = all_data['sentiment'].map({'Bullish': 1, 'Bearish': 0})

# One-hot encode the 'ticker' and 'sector' columns

X = pd.get_dummies(all_data[['ticker', 'no_of_comments', 'sentiment', 'sentiment_score', 'price', 'sector']], columns=['ticker', 'sector'])

# Define the target variable

y = all_data['correct']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Standardize the features

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

# Train a logistic regression model

model = LogisticRegression(max_iter=1000)

model.fit(X_train, y_train)

# Make predictions

y_pred = model.predict(X_test)

# Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print(f"Test Accuracy: {accuracy}")

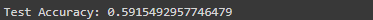

print(classification_report(y_test, y_pred))This model produces a test accuracy of 0.59. If I were to continue on this path, I would tweak the date range and enrich the data set with a few more variables.
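One caveat when tweaking the date range: `train_test_split` shuffles rows, so the model can be trained on days that come after the days it is tested on. A minimal sketch of a chronological hold-out instead, assuming the `date` column holds the `'YYYY-MM-DD'` strings from the `dates` list; the helper name `chronological_split`, the cutoff date, and the toy rows are all hypothetical.

```python
import pandas as pd


def chronological_split(df: pd.DataFrame, cutoff: str):
    """Split rows into train (date < cutoff) and test (date >= cutoff).

    Hypothetical helper: assumes df['date'] holds 'YYYY-MM-DD' strings.
    """
    row_dates = pd.to_datetime(df['date'])
    cutoff_ts = pd.Timestamp(cutoff)
    train = df[row_dates < cutoff_ts]
    test = df[row_dates >= cutoff_ts]
    return train, test


# Toy frame with made-up rows
toy = pd.DataFrame({
    'date': ['2024-05-20', '2024-05-21', '2024-06-10', '2024-06-13'],
    'ticker': ['GME', 'AMC', 'GME', 'NVDA'],
    'correct': [1, 0, 0, 1],
})
train, test = chronological_split(toy, '2024-06-10')
print(len(train), len(test))  # 2 2
```

With the real data you could split `all_data` this way and then index the existing `X` and `y` with `train.index` and `test.index` (for example `X.loc[train.index]`), which keeps the one-hot columns aligned; the scaler would still be fit on the training rows only.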

Disclaimer

The information provided in this content is for informational purposes only and should not be construed as financial, investment, or other professional advice. The views and opinions expressed are those of the author and do not necessarily reflect the official policy or position of any other agency, organization, employer, or company. Always do your own research and seek the advice of a qualified financial advisor before making any investment decisions.